Drone 3D Modeling and Oblique Photography Tutorials

Drone Overview

Agricultural and Forestry Plant Protection

An agricultural plant protection drone is an unmanned aircraft used for plant protection operations in agriculture and forestry. It consists of three parts: a flight platform (fixed-wing, single-rotor helicopter or multi-rotor), a GPS flight controller, and a spraying mechanism. Spraying is carried out either by ground remote control or under GPS flight control, and the drone can spray pesticides, seeds, powders and similar materials. Compared with manual work, agricultural plant protection drones have the following advantages:

1. High safety: operators are protected from pesticide poisoning

Manual spraying of pesticides is very harmful to the operator. According to reported figures, about 10,000 people are poisoned by pesticides each year, with a fatality rate of about 20%, and no statistics exist for casualties caused by pesticide residues and pollution. Because a plant protection drone is operated remotely, the operator is kept away from the pesticide, which protects them from contamination and makes spraying operations safer.

2. Much more efficient than manual spraying

According to overseas data, a plant protection drone operating at scale can cover 80 to 100 mu per hour (1 mu ≈ 667 m², about one-sixth of an acre), at least 100 times faster than conventional manual spraying. In addition, the drone flies at a low operating height, and the downwash from its rotors helps atomize the spray and drive it into the crop canopy. This increases spray penetration, reduces pesticide loss, and gives better pesticide deposition and coverage than ordinary manual spraying, so pest and disease control is more effective and less pesticide ends up polluting the soil.
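As a rough illustration of where hourly coverage figures like these come from, the sketch below multiplies swath width by ground speed and discounts the result for turns, refilling and battery swaps. The swath width, speed and field efficiency are hypothetical values chosen for illustration, not figures from this tutorial.

```python
# Rough work-rate estimate for a spraying drone.
# All parameter values below are hypothetical, for illustration only.
M2_PER_HECTARE = 10_000
M2_PER_MU = 10_000 / 15  # 1 mu = 666.67 m^2


def work_rate_m2_per_hour(swath_m: float, speed_m_s: float, field_efficiency: float) -> float:
    """Effective area sprayed per hour.

    field_efficiency in (0, 1] discounts time lost to turns, refilling and battery swaps.
    """
    if swath_m <= 0 or speed_m_s <= 0:
        raise ValueError("swath and speed must be positive")
    if not 0 < field_efficiency <= 1:
        raise ValueError("field_efficiency must be in (0, 1]")
    return swath_m * speed_m_s * 3600 * field_efficiency


if __name__ == "__main__":
    rate = work_rate_m2_per_hour(swath_m=5.0, speed_m_s=5.0, field_efficiency=0.7)
    print(f"{rate / M2_PER_HECTARE:.1f} ha/h, {rate / M2_PER_MU:.1f} mu/h")
```

The field efficiency term is the least certain input; it depends heavily on field shape and on how often the tank and battery must be replaced.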

3. Saving resources and reducing costs

Drone spraying atomizes the liquid, which can save at least 50% of pesticide and 90% of water, greatly reducing resource costs. In addition, the drone has a low depreciation rate, low fuel consumption and low labor cost per unit of work, and it is easy to maintain.

4. Autonomous operation under flight control and navigation

Drone spraying is not restricted by terrain or height. It supports both long-range remote control and autonomous operation under flight control and navigation. Before spraying, the operator collects information about the crops and the farmland, plans the route, and enters it into the ground station's control system. The ground station then sends instructions to the aircraft, which carries the spraying device, completes the spraying operation autonomously, and flies back to the take-off point automatically when finished. While the aircraft is spraying, the progress of the operation can be monitored in real time on the ground station's display.
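The route-planning step can be pictured as generating back-and-forth (boustrophedon) passes over the field. The sketch below assumes a rectangular field in a local metric frame with the take-off point at its corner; real ground-station software works with GPS coordinates and irregular field boundaries, and the function name is hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def lawnmower_route(width_m: float, length_m: float, swath_m: float,
                    home: Point = (0.0, 0.0)) -> List[Point]:
    """Back-and-forth waypoints covering a width x length rectangle.

    The rectangle's lower-left corner is at the origin. Passes run along the
    y axis, one swath apart, starting half a swath in from the edge. The route
    starts and ends at `home`, mirroring the automatic return to the take-off point.
    """
    if swath_m <= 0:
        raise ValueError("swath_m must be positive")
    route = [home]
    x = swath_m / 2
    going_up = True
    while x < width_m:
        start_y, end_y = (0.0, length_m) if going_up else (length_m, 0.0)
        route.append((x, start_y))
        route.append((x, end_y))
        going_up = not going_up  # reverse direction on the next pass
        x += swath_m
    route.append(home)
    return route


if __name__ == "__main__":
    for waypoint in lawnmower_route(width_m=20.0, length_m=100.0, swath_m=5.0):
        print(waypoint)
```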

Power Inspection

Drone power line inspection uses drones equipped with cameras, infrared sensors and other equipment to check high-voltage power lines for poor contacts, leakage, overheating, external damage and other potential hazards; see the table below. Compared with traditional inspection methods, drone inspection has the following advantages:

1. Significant efficiency gains

Traditional line patrols cover long distances with a heavy workload, and patrolling on foot is very inefficient. Drone patrols are fast and accurate and leave no blind spots, making them tens of times more efficient than manual patrols.

2. High safety

Natural disasters and severe weather such as ice, snow, earthquakes and landslides, as well as complex terrain such as mountains, canyons and rivers, make manual line patrols highly risky and constantly threaten the lives of patrol personnel. Drone patrols are far less constrained by weather and terrain, which reduces the intensity of manual labor and lowers operational risk.

3. More timely information

Because drones patrol quickly and respond rapidly to emergencies, they can detect defects and report them in time, reducing the risk of heavy losses from line accidents.

| Item | Manual | Manned helicopter | Drone |
| --- | --- | --- | --- |
| Inspection fees | $3.5 million | $26.25 million | $5.25 million |
| Equipment costs | About $250,000 for tools, vehicles, etc. | $20 million per aircraft | $2 million |
| Total inspection time | 3,500 days per crew | 90 days per aircraft | 525 days per set |
| Inspection quality | Average | Excellent | Relatively high |
| Safety | Tower climbing; high safety risk | Some risk; an accident would be very dangerous | No personal safety risk; some equipment risk |
| Characteristics | Traditional technology; low technical demands | Visual inspection from the helicopter | Advanced technology; configurable payloads |
| Air traffic control restrictions | None | Restricted | Partially restricted |
| Summary | Labor-intensive, time-consuming and not very safe | Very costly, limited by ATC, complicated procedures | Lower cost and more flexible |

Forest Fire Prevention

The workflow and advantages of drones in forest fire prevention are as follows:

1. Quickly locate the fire point

The drone's onboard infrared and visible-light cameras monitor the forest, and the imagery and temperature measurements are transmitted to the ground control station in real time. Fire points and hot spots are displayed on the station's digital map, an identification system determines whether each hot spot is actually a fire, and the fire point is located precisely, so the fire department receives the geographic coordinates (longitude and latitude) of the fire as early as possible.
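A minimal sketch of the hot-spot step, assuming the thermal frame has already been converted to a temperature grid and georeferenced with an affine geotransform in the same six-parameter layout GDAL uses. A fixed temperature threshold stands in for the identification system described above; the threshold, grid and coordinates are hypothetical.

```python
from typing import List, Sequence, Tuple

# (x_origin, pixel_width, row_rotation, y_origin, column_rotation, pixel_height),
# the same six-parameter affine layout GDAL uses for geotransforms.
GeoTransform = Tuple[float, float, float, float, float, float]


def pixel_to_geo(gt: GeoTransform, col: float, row: float) -> Tuple[float, float]:
    """Map a (column, row) pixel position to (longitude, latitude)."""
    lon = gt[0] + col * gt[1] + row * gt[2]
    lat = gt[3] + col * gt[4] + row * gt[5]
    return lon, lat


def find_hotspots(temps_c: Sequence[Sequence[float]], threshold_c: float,
                  gt: GeoTransform) -> List[Tuple[float, float, float]]:
    """Return (lon, lat, temperature) for every pixel hotter than threshold_c.

    Adding 0.5 puts the coordinate at the pixel centre rather than its corner.
    """
    hits = []
    for r, row in enumerate(temps_c):
        for c, t in enumerate(row):
            if t > threshold_c:
                lon, lat = pixel_to_geo(gt, c + 0.5, r + 0.5)
                hits.append((lon, lat, t))
    return hits


if __name__ == "__main__":
    frame = [  # hypothetical temperature grid in degrees Celsius
        [18.0, 19.5, 21.0],
        [20.0, 24.0, 350.0],
        [19.0, 22.0, 25.0],
    ]
    gt = (116.0, 0.001, 0.0, 40.0, 0.0, -0.001)  # hypothetical 0.001-degree pixels
    for lon, lat, t in find_hotspots(frame, threshold_c=60.0, gt=gt):
        print(f"possible fire at lon {lon:.4f}, lat {lat:.4f} ({t:.0f} C)")
```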

2. Rapid assessment of the fire situation

While flying over the fire, the drone can also send the fire's perimeter, burned area and rate of spread back to the ground control center in real time. This gives the firefighting command reliable information for quickly and effectively organizing and deploying firefighting teams, which improves the efficiency of firefighting operations and helps prevent injuries and deaths among firefighters.
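Fire area and spreading speed can be derived from successive perimeter polygons. The sketch below uses the shoelace formula for area and, as one simple assumed measure of spread, the growth of the equivalent-circle radius between two observations. It assumes the perimeter vertices have already been projected into a local metric frame; the perimeters themselves are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vertex = Tuple[float, float]


def polygon_area_m2(vertices: Sequence[Vertex]) -> float:
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    n = len(vertices)
    if n < 3:
        raise ValueError("a polygon needs at least 3 vertices")
    twice_area = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the ring
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2


def spread_rate_m_per_min(area0_m2: float, area1_m2: float, minutes: float) -> float:
    """Growth of the equivalent-circle radius sqrt(A / pi) per minute (a simplification)."""
    r0 = math.sqrt(area0_m2 / math.pi)
    r1 = math.sqrt(area1_m2 / math.pi)
    return (r1 - r0) / minutes


if __name__ == "__main__":
    t0 = [(0, 0), (100, 0), (100, 100), (0, 100)]              # perimeter at t0
    t1 = [(-50, -50), (150, -50), (150, 150), (-50, 150)]      # 10 minutes later
    a0, a1 = polygon_area_m2(t0), polygon_area_m2(t1)
    print(a0, a1, round(spread_rate_m_per_min(a0, a1, 10.0), 2))
```

Real fires rarely spread uniformly, so per-direction measurements along the perimeter are more informative than a single equivalent radius.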

3. Provide optimal evacuation routes for the fire service

Effective transmission of information not only lets the fire brigade quickly deploy personnel to key areas, but also allows firefighters to be warned in time to leave dangerous areas and to be given the optimal evacuation route based on images of the fire scene. Drone patrols are also much more efficient than traditional manual patrols: a drone can complete in a short time patrol work that would otherwise take many people many hours, at a very low operating cost.

Disaster Relief

After a major natural disaster, when communications are disrupted and transportation is blocked, drones can quickly cross mountains and rivers and fly deep into the affected area to capture photos and video, assess the situation, and give rescue and relief teams accurate, timely information. Micro-drone remote sensing systems can play the following roles in disaster relief:

1. Changes how disaster sites are surveyed

Micro-drones can survey a disaster scene from different angles. This matters most under particular disaster and geographic conditions (such as earthquakes, snowstorms and flash floods): when staff cannot reach the planned survey site, a micro-drone can fly to the scene quickly, capture the disaster situation, and support rescue decision-making.

2. Enhanced disaster site investigation capabilities

Micro-drones greatly enhance the ability to investigate disaster sites. Especially when the affected area is large and transportation is difficult, drones can quickly survey the disaster over a wide area, greatly improving the timeliness of relief work.

3. Provides more objective disaster information

Micro-drones can provide the most objective disaster information available to relief work. Aerial photos and videos let relief personnel see higher, farther and wider across the disaster area, which reduces reliance on subjective judgment and avoids problems such as unclear field reports, conflicting opinions and doubts raised by others.

System Composition of Multi-rotor UAVs

Mission Payload System

A UAV's mission capability is determined mainly by its mission payloads. The mission payload is the set of instruments, equipment and subsystems carried on the UAV platform to accomplish the specific mission of the flight; the UAV itself is merely the platform and carrier for these devices. The mission payload includes the sensors and equipment needed to perform tasks such as transport, but excludes avionics, data link equipment and fuel.

A typical drone mission payload system includes an information acquisition system, an information receiving and transmitting system, a mission execution system, a power supply system and auxiliary mechanisms. Some highly intelligent drones also include an information analysis and decision-making system.

The rapid development of mission payloads has greatly expanded the fields in which drones are used, and the payload a drone carries depends on its function and type. Mission payloads fall broadly into release payloads (rescue drops, pulling pilot lines for power-line stringing, pesticide spraying), collection payloads (atmospheric monitoring, sampling, emergency response, search and rescue, remote sensing), electro-optical payloads (monitoring, surveillance) and others (communication, experiments, relay).

Optical Sensors

The optical camera is an optical imaging device and was the earliest detection equipment mounted on drones. Its greatest advantage is very high resolution, which other imaging detectors have not yet matched; its disadvantage is that it cannot meet the need for real-time intelligence.

Electro-optical cameras work in the visible spectrum and use electronically controlled pan, zoom and focus to produce images as motion video, still pictures, or both. Most small drones carry electro-optical cameras with narrow- to medium-field-of-view lenses. Optical sensors can perform a wide range of tasks and can be combined with other types of sensors to produce composite images. They are used mostly in daylight to maximize image quality.

Infrared Sensors

Infrared cameras are generally active infrared devices. They rely on the fact that ordinary CCD cameras are sensitive to infrared as well as visible light, and use infrared lamps as the illumination source to achieve night-vision imaging. Thermal imaging cameras, by contrast, are entirely passive: they detect the infrared radiation emitted by the target itself and, through photoelectric conversion and signal processing, convert the target's temperature distribution into a video image.

Drones use two types of infrared cameras: cooled and uncooled. Modern cooled cameras use a cryogenic cooler to bring the sensor down to a very low temperature. Such systems can operate in the mid-wave infrared band, where thermal contrast is high. The detector of a cooled camera is usually housed in a vacuum-sealed enclosure and needs extra power for cooling. In general, cooled cameras produce higher-quality images than uncooled ones. Uncooled sensors operate at or slightly below ambient temperature and work by detecting the change in resistance, voltage or current caused when they are heated by incoming infrared radiation. Uncooled sensors are designed to operate in the long-wave infrared band from 7 to 14 μm, which is where objects at ordinary ground temperatures radiate the most infrared energy.
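Why the 7 to 14 μm band suits ground targets can be checked with Wien's displacement law, λ_max = b / T with b ≈ 2898 μm·K, which gives the wavelength at which a blackbody at temperature T radiates most strongly. The temperatures below are illustrative, and real surfaces are not perfect blackbodies.

```python
WIEN_B_UM_K = 2897.77  # Wien's displacement constant in micrometre-kelvin


def peak_wavelength_um(temperature_k: float) -> float:
    """Peak emission wavelength of a blackbody (Wien's displacement law)."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B_UM_K / temperature_k


if __name__ == "__main__":
    for label, t_k in [("ground at about 27 C", 300.0), ("hot surface at about 500 C", 773.15)]:
        print(f"{label}: peak near {peak_wavelength_um(t_k):.1f} um")
```

Ground-temperature objects peak near 9.7 μm, inside the long-wave band, while much hotter targets peak in the mid-wave band (roughly 3 to 5 μm).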

An infrared line scanner (IRLS) is a thermal imaging device that uses a scanning mirror to collect infrared radiation from the ground and project it onto an infrared detector, forming an infrared image signal. This signal can also be used to modulate a video channel and sent back to the ground receiving station through the data transmission system.

A forward-looking infrared (FLIR) imager, i.e. a thermal imager, uses a staring focal-plane-array infrared detector that performs imaging detection, integration, filtering and multiplexing in a single device. Such an all-solid-state infrared imager is small, light and highly reliable, and has higher sensitivity, higher resolution and longer range than scanning imagers, which makes it well suited to drone monitoring and surveillance missions.

Laser Sensors

Drone-mounted lidar is an emerging technology. As an effective drone payload, lidar (laser radar) converts the real scene into a point cloud that displays and reproduces the scene intuitively.

Lidar works on a principle similar to radar: it calculates the distance between a target and the sensor from the time a laser pulse takes to return after hitting an obstacle. The laser beams precisely measure the distance from the sensor to the contour edges of objects in the field of view. This contour information forms a point cloud, from which a 3D map of the environment can be produced with centimeter-level accuracy. As the "eye of the machine", lidar is now used in a large number of drone applications.
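The time-of-flight relation behind lidar ranging is R = c·t/2, halved because the pulse travels out and back. The sketch below also converts a range plus beam angles into a point in the sensor frame using a simple spherical model; a real system must additionally apply the drone's GNSS/IMU position and attitude, which is omitted here. The axis convention is an assumption.

```python
import math
from typing import Tuple

C_M_S = 299_792_458.0  # speed of light in vacuum (in air it is about 0.03% lower)


def tof_range_m(round_trip_s: float) -> float:
    """Distance to the target from the round-trip time of a laser pulse."""
    return C_M_S * round_trip_s / 2


def beam_to_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> Tuple[float, float, float]:
    """Sensor-frame XYZ of the return (x forward, y left, z up; angles in degrees)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horiz = range_m * math.cos(el)
    return horiz * math.cos(az), horiz * math.sin(az), range_m * math.sin(el)


if __name__ == "__main__":
    r = tof_range_m(1e-6)  # a 1 microsecond round trip
    print(f"range {r:.2f} m")
    # 1 ns of timing error shifts the range by about 15 cm, so centimetre-level
    # accuracy requires timing resolution in the tens of picoseconds.
    print(f"1 ns error -> {tof_range_m(1e-9) * 100:.1f} cm")
    print(beam_to_point(r, 30.0, -45.0))
```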

Lidar also has distinctive advantages: high range, angular and velocity resolution, a wide range of measurable speeds, the ability to obtain several kinds of images of a target, strong resistance to interference, and smaller size and lower weight than microwave radar.

A laser designator illuminates a target with a laser beam of invisible, coded pulses; the pulses reflected from the target are picked up by a receiver. This method has drawbacks, however. Accuracy is poor when the atmosphere is not clear enough (for example in rain, cloud, dust or smoke). In addition, the laser may be absorbed by special coatings, may not be reflected correctly, or may not be reflected back at all (for example when it strikes glass).

Synthetic Aperture Radar (SAR) Sensors

Synthetic aperture radar (SAR), sometimes also called composite aperture radar, uses the relative motion between the radar and the target, together with data processing, to synthesize a small real antenna aperture into an equivalent antenna aperture of much larger size. Conventional drones have carried small, lightweight electro-optical imaging equipment, whose value for surveillance and target interception missions has been proven. Its shortcomings are a short detection range, no ability to measure range, and susceptibility to clouds, rain and snow, which are exactly the strengths of airborne radar. Radar, however, is usually large, heavy and power-hungry, so few drones can carry it, and synthetic aperture radar is currently regarded as the direction of development for active imaging and detection payloads on drones.

Conventional radar has limited resolution because of its antenna length and wavelength, and synthetic aperture radar overcomes this limitation. It uses a side-looking antenna and exploits the Doppler effect produced by the platform's forward motion to combine successive echoes into a sharpened synthetic beam, improving the radar's resolution. SAR offers high resolution, all-weather operation, and the ability to identify camouflage and penetrate cover, and its high azimuth resolution is equivalent to that of a large-aperture antenna.
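The resolution gain can be made concrete with the standard textbook approximations: a real-aperture antenna of length D at range R resolves about λR/D in azimuth, while a stripmap SAR reaches about D/2 regardless of range. The wavelength, range and antenna length below are illustrative assumptions.

```python
def real_aperture_azimuth_res_m(wavelength_m: float, range_m: float, antenna_m: float) -> float:
    """Azimuth resolution of a real antenna: beam width (lambda / D) times range."""
    return wavelength_m * range_m / antenna_m


def sar_stripmap_azimuth_res_m(antenna_m: float) -> float:
    """Theoretical stripmap SAR azimuth resolution: half the antenna length, independent of range."""
    return antenna_m / 2


if __name__ == "__main__":
    lam, rng, d = 0.03, 5_000.0, 0.5  # X-band (3 cm), 5 km range, 0.5 m antenna
    print(f"real aperture: {real_aperture_azimuth_res_m(lam, rng, d):.0f} m")
    print(f"SAR:           {sar_stripmap_azimuth_res_m(d):.2f} m")
```

Note the inversion: for SAR a shorter antenna gives finer azimuth resolution, which is one reason the technique suits small platforms.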

Multispectral Camera Sensors

The multispectral camera was developed from the ordinary aerial camera. Multispectral photography extends imaging from the visible range toward both the infrared and the ultraviolet. By combining filters or beam splitters with several photosensitive devices, it records the radiation or reflection of the same target simultaneously in several different narrow spectral bands, producing a separate photograph of the target in each band.

Multispectral cameras come in three types. The first is the multi-lens multispectral camera, which has 4 to 9 lenses, each with a filter that passes a narrow band; all lenses photograph the same scene at the same time, capturing image information in different spectral bands. The second is the multi-camera type, a combination of several cameras, each with a different filter, so that each camera records one spectral band of the scene on its own set of film. The third is the beam-splitting type, which photographs the scene through a single lens and uses several prisms to split the incoming light into multiple bands, recording each band separately. Of the three, the beam-splitting type has a simple structure and high image registration accuracy but poor image quality; the multi-lens and multi-camera types find it hard to aim every lens precisely at the same spot, so their registration accuracy is poor and their image quality is also poor.

Most multispectral imaging instruments work passively and, by working principle, fall into two categories: optical imaging and scanning imaging. Optical imaging instruments include framing multispectral cameras, panoramic cameras and slit cameras; scanning imaging instruments include optical scanners, imaging spectrometers and imaging polarimeters.

Multispectral imaging spectroscopy (MIS) splits an incident full-band or broadband optical signal into several narrow-band beams and images each one on its own detector, producing images in different spectral bands. In practice, extracting and identifying target features more effectively requires the detection system to have fine spectral resolution, that is, to divide the spectrum into narrower bands and make use of each of them; imaging spectroscopy is the technique that accomplishes this.

Hyperspectral Camera Sensors

Hyperspectral imaging acquires image data in a very large number of narrow bands. It combines imaging and spectroscopy to capture both the two-dimensional spatial information and the one-dimensional spectral information of a target, producing continuous, narrow-band image data with high spectral resolution. Hyperspectral imaging technology is developing rapidly; common approaches include grating dispersion, acousto-optic tunable filters, prism dispersion, and filter coatings deposited directly on the sensor chip.

A hyperspectral camera acquires image data in many very narrow, contiguous spectral bands across the visible, near-infrared, mid-infrared and thermal-infrared regions of the electromagnetic spectrum. The image is finely divided along the spectral dimension: instead of just black and white or R, G and B, it has N channels; for example, the range from 400 to 1000 nm may be divided into 300 channels. A hyperspectral instrument therefore produces a data cube that contains not only image information but also an extra spectral dimension, so it is possible to obtain both the spectrum at every point in the image and the image in any spectral band.
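The 400 to 1000 nm, 300-channel example works out to 2 nm per channel. The sketch below computes the band centres and shows the two ways of reading a data cube described above: the full spectrum at one pixel, and the image in one band. Storing the cube as nested lists indexed [row][col][band] and the tiny synthetic cube are assumptions for illustration; real software typically uses array libraries.

```python
from typing import List

Cube = List[List[List[float]]]  # cube[row][col][band]


def band_centers_nm(start_nm: float, stop_nm: float, n_bands: int) -> List[float]:
    """Centre wavelength of each of n_bands equal-width channels."""
    step = (stop_nm - start_nm) / n_bands
    return [start_nm + step * (i + 0.5) for i in range(n_bands)]


def pixel_spectrum(cube: Cube, row: int, col: int) -> List[float]:
    """All band values at one pixel: the spectral curve of that ground point."""
    return list(cube[row][col])


def band_image(cube: Cube, band: int) -> List[List[float]]:
    """The 2-D image slice of the cube for a single band."""
    return [[pixel[band] for pixel in row] for row in cube]


if __name__ == "__main__":
    centers = band_centers_nm(400.0, 1000.0, 300)
    print(centers[0], centers[1], centers[-1])
    rows, cols = 2, 3
    cube = [[[float(r * 100 + c * 10 + b) for b in range(300)] for c in range(cols)]
            for r in range(rows)]
    print(len(pixel_spectrum(cube, 1, 2)), band_image(cube, 0))
```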

Drone payloads are currently developing in the following directions:

- Diversification, giving drones more mission capabilities for handling various complex situations.

- Miniaturization, which frees internal space so that the airframe can carry more mission payloads or a larger battery or generator, further improving drone performance.

- Modular design, which lets sensors be swapped quickly for different missions or upgrades, so the drone can be adapted faster to specific tasks.

- Integration of multiple payloads, which greatly reduces the susceptibility of a single payload to acoustic, optical, electrical, atmospheric and other interference and improves the quality of the results.

With the rapid development of computing and automatic control technology, the drone is no longer an unmanned aerial vehicle in the traditional sense, but an aerial robot with a higher degree of autonomy.

Fundamentals of Photogrammetry

Introduction to Photogrammetry

Photogrammetry is the information science of acquiring, processing, extracting and presenting information about objects through the study of images.

Photogrammetry is a branch of surveying and mapping. Its basic task is to rigorously establish the geometric relationship between an image at the instant of exposure and the corresponding object. Once this relationship is correctly restored, information about the object can be rigorously derived from the image.
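In practice, this geometric relationship is usually expressed with the collinearity equations, which state that the projection centre, an image point and its ground point lie on one straight line. The sketch below projects a ground point into image coordinates. It assumes the omega-phi-kappa rotation order R = Rx(ω)·Ry(φ)·Rz(κ), which is one of several conventions in use, and ignores lens distortion; the camera position and focal length are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul3(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rotation_opk(omega: float, phi: float, kappa: float) -> Matrix:
    """R = Rx(omega) @ Ry(phi) @ Rz(kappa), angles in radians (assumed convention)."""
    co, so = math.cos(omega), math.sin(omega)
    cp, sp = math.cos(phi), math.sin(phi)
    ck, sk = math.cos(kappa), math.sin(kappa)
    rx = [[1.0, 0.0, 0.0], [0.0, co, -so], [0.0, so, co]]
    ry = [[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]]
    rz = [[ck, -sk, 0.0], [sk, ck, 0.0], [0.0, 0.0, 1.0]]
    return matmul3(matmul3(rx, ry), rz)


def project(ground: Sequence[float], center: Sequence[float], r: Matrix,
            f: float, x0: float = 0.0, y0: float = 0.0) -> Tuple[float, float]:
    """Collinearity equations: image coordinates of a ground point.

    x = x0 - f * (a1*dX + b1*dY + c1*dZ) / (a3*dX + b3*dY + c3*dZ)
    y = y0 - f * (a2*dX + b2*dY + c2*dZ) / (a3*dX + b3*dY + c3*dZ)
    where (a_i, b_i, c_i) is column i of R, i.e. u = R^T (X - Xs).
    """
    d = [g - s for g, s in zip(ground, center)]
    u = [sum(r[k][i] * d[k] for k in range(3)) for i in range(3)]
    if u[2] >= 0:
        raise ValueError("ground point is not in front of the camera")
    return x0 - f * u[0] / u[2], y0 - f * u[1] / u[2]


if __name__ == "__main__":
    R = rotation_opk(0.0, 0.0, 0.0)  # a perfectly vertical photo
    # Camera 1000 m above the origin, 50 mm focal length (hypothetical values).
    print(project((100.0, 50.0, 0.0), (0.0, 0.0, 1000.0), R, f=0.05))
```

For a vertical photo this reduces to the familiar scale relation x = f·X/H, which is a quick sanity check on the sign conventions.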

The main tasks of photogrammetry are to produce topographic maps at various scales and to build digital terrain models, providing basic data for geographic and land information systems. Photogrammetry solves two major problems: geometric positioning, which determines the size, shape and spatial position of the photographed object, and image interpretation, which determines the nature of the features that the image represents.

Vertical photography means that at the moment of exposure the camera's principal optical axis is approximately perpendicular to the ground, so that the image plane deviates from the horizontal by less than 3°. Because the principal optical axis is perpendicular to the image plane, the angle between the principal optical axis and the plumb line equals the tilt of the image and is called the image tilt angle, as shown in Figure 5-1.
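For the vertical-photography check, the tilt angle can be computed from the camera's attitude angles. Assuming the omega-phi-kappa convention R = Rx(ω)·Ry(φ)·Rz(κ) (an assumption; conventions differ between systems), the optical axis makes an angle t with the plumb line where cos t = cos ω · cos φ, independent of κ. The function names are hypothetical.

```python
import math


def tilt_angle_deg(omega_deg: float, phi_deg: float) -> float:
    """Angle between the optical axis and the plumb line.

    For R = Rx(omega) Ry(phi) Rz(kappa), R[2][2] = cos(omega) * cos(phi);
    kappa (rotation about the optical axis) does not change the tilt.
    """
    c = math.cos(math.radians(omega_deg)) * math.cos(math.radians(phi_deg))
    return math.degrees(math.acos(max(-1.0, min(1.0, c))))  # clamp guards rounding


def is_vertical_photo(omega_deg: float, phi_deg: float, limit_deg: float = 3.0) -> bool:
    """True if the image counts as a vertical photograph (tilt below limit_deg)."""
    return tilt_angle_deg(omega_deg, phi_deg) < limit_deg


if __name__ == "__main__":
    for omega, phi in [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (3.0, 1.0)]:
        print(omega, phi, round(tilt_angle_deg(omega, phi), 3), is_vertical_photo(omega, phi))
```

Note that two small rotations combine to a larger tilt: 2° in both ω and φ gives roughly 2.83°.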

For low-altitude photography, however, the image tilt angle should generally not exceed 5° and must never exceed 12°, and images tilted more than 8° should make up no more than 10% of the total. In areas with special terrain, the tilt should generally not exceed 8° and must never exceed 15°, and images tilted more than 10° should make up no more than 10% of the total.
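The low-altitude tilt limits above can be turned into a simple automated check over a flight's image tilts. This sketch enforces only the hard limits for each area type (the maximum tilt, and the 10% share of images above the 8° or 10° threshold) and leaves the "generally not more than 5°/8°" guideline as a soft target. The function name and the area labels are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# (maximum tilt, threshold, maximum share of images above the threshold), per the text
TILT_RULES: Dict[str, Tuple[float, float, float]] = {
    "normal": (12.0, 8.0, 0.10),
    "special": (15.0, 10.0, 0.10),
}


def check_tilts(tilts_deg: Sequence[float], area: str = "normal") -> List[str]:
    """Return a list of rule violations (empty if the flight passes)."""
    maximum, threshold, max_share = TILT_RULES[area]
    if not tilts_deg:
        return []
    problems = []
    worst = max(tilts_deg)
    if worst > maximum:
        problems.append(f"maximum tilt {worst:.1f} deg exceeds {maximum:.0f} deg")
    share = sum(t > threshold for t in tilts_deg) / len(tilts_deg)
    if share > max_share:
        problems.append(f"{share:.0%} of images exceed {threshold:.0f} deg (limit {max_share:.0%})")
    return problems


if __name__ == "__main__":
    flight = [1.2, 2.5, 3.1, 4.0, 9.2]  # hypothetical per-image tilts in degrees
    print(check_tilts(flight) or "all tilt limits met")
```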

Classification of Photogrammetry

Classification by photographic distance

- Aerospace photogrammetry (remote sensing)

The sensor is mounted on a spacecraft or satellite at a photographic distance of 240–350 km. It is mainly used to produce topographic or thematic maps from satellite remote sensing images, or to extract required spatial information quickly.

- Aerial photogrammetry

The sensor is mounted on an airplane or other aircraft at a photographic distance of 500–8000 m. This is the main photogrammetric method for producing small- and medium-scale topographic maps.

- Low-altitude photogrammetry

The sensor is mounted on a drone at a photographic distance of 50–500 m. This is the main method for producing large-scale topographic maps, and it is also commonly used for engineering surveys of small areas and for supplementary photography to fill gaps in aerial coverage.

- Close-range photogrammetry

The photographic distance is less than 50 m. It is mainly used to measure specific, often vertical, targets rather than terrain.

Classification by use

- Topographic photogrammetry mainly produces the national basic-scale topographic maps, as well as the thematic maps needed by departments such as urban and rural planning, agriculture, forestry, geology, transportation, engineering and resources; it also builds topographic databases and provides three-dimensional base data for geographic information systems. Drone photogrammetry applications usually fall into this category.

- Non-topographic photogrammetry applies photogrammetric methods to scientific and technical problems in resource surveys, deformation monitoring, environmental monitoring, military reconnaissance, ballistic trajectory measurement and blasting, as well as in industry, architecture, archaeology, geological engineering, biology and medicine. The objects and tasks vary greatly, but the main method is the same as in topographic photogrammetry: reconstruct a three-dimensional model from two-dimensional images and extract the required information from the reconstructed model.

Photogrammetric tasks

The main task of photogrammetric surveying is to survey the ground and, on that basis, produce topographic and thematic maps at various scales, build topographic map databases, and provide the basic data needed to build and update geographic information systems.

In addition, photogrammetry is widely used for non-topographic mapping, such as monitoring hazardous environments like blasting sites and high-temperature or vacuum environments.

Advantages of photogrammetry

Advantages of photogrammetry over conventional measurements include:

- Images record the object objectively, contain rich information and are clear, so people can obtain the geometric or physical information they need more conveniently; this makes image information an outstanding basis for mapmaking.

- Photogrammetry does not require contact with the object being measured, so the measurement is not constrained by conditions at the site. For example, monitoring landslides, mudslides and other geological hazards is dangerous and people may be unable to observe on site; in such cases photogrammetry is particularly important.

- Photogrammetry can map dynamic changes and moving targets. An image faithfully records the state of the object at a given moment, so photogrammetry can be used to study dynamic targets, and such a study is holistic, comprehensive and simultaneous rather than local, one-sided and time-lagged. For example, photogrammetric techniques can be applied to moving, non-fixed targets such as liquids and gases.

- Photogrammetry can map complex targets. Mapping mountainous terrain with a theodolite can be very difficult, and missing or omitting key feature points during data collection reduces map accuracy, whereas an image captures the whole scene at once.

- Image data can be reused and preserved permanently. An image records the landforms of a place objectively and in detail, making it an important record of local information. Comparing images from different periods reveals how the landforms of a place change and develop.

Stages of Photogrammetry Development

Photogrammetry has gone through three stages of development: analog photogrammetry, analytical photogrammetry and digital photogrammetry.

- Analog photogrammetry (1900–1960)

In the mid-19th century, Aimé Laussedat made a map of the Château de Vincennes from photographs, marking the birth of photogrammetry. At that time, objects were mapped point by point using graphical methods. Later, optical, mechanical or opto-mechanical projectors were used to simulate the photographic process and to reconstruct the spatial positions of the photographed objects by intersecting the projected rays; equipment of this type is called analog photogrammetric equipment.

- Analytical photogrammetry (1956–1980)

With the emergence of electronic computers, automatic control and analog-to-digital conversion, analog photogrammetric equipment was improved: physical projection was replaced by numerical projection, and computer-controlled measurement was used to compute the ground coordinates corresponding to measured image points and to produce maps. This computer-assisted, manually operated form of photogrammetry is called analytical photogrammetry.

- Digital photogrammetry (1980–present)

Digital photogrammetry uses computers to analyze and process digital images in order to determine the shape, size, spatial position and nature of the photographed objects; it is fully digital. A digital image is a continuous image sampled into a discrete two-dimensional gray-level matrix: the row and column indices of each element give its position in the photo, and the element's value is the gray level of the image at that position. Each matrix element corresponds to a small area of the image, such as 13 μm × 13 μm, 25 μm × 25 μm or 50 μm × 50 μm, and is called a picture element (pixel).
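The pixel sizes above determine how large a scanned photograph becomes. Assuming a standard 23 cm × 23 cm aerial film frame and 8-bit gray levels (both assumptions, not stated in the text), the sketch computes the matrix dimensions for each scanning resolution and shows how a gray value is read by row and column.

```python
FILM_SIDE_MM = 230.0  # standard 23 cm x 23 cm aerial film frame (assumed)


def scan_dimension_px(side_mm: float, pixel_um: float) -> int:
    """Number of pixels along one side when scanning at the given pixel size."""
    return round(side_mm * 1000 / pixel_um)


if __name__ == "__main__":
    for pixel_um in (13, 25, 50):
        n = scan_dimension_px(FILM_SIDE_MM, pixel_um)
        # one byte per pixel for 8-bit gray levels (0-255)
        print(f"{pixel_um} um: {n} x {n} pixels, about {n * n / 1e6:.0f} MB at 8 bits")

    # A digital image is a gray-level matrix indexed [row][column].
    image = [
        [12, 40, 200],
        [15, 60, 210],
    ]
    row, col = 1, 2
    print(f"gray value at row {row}, column {col}: {image[row][col]}")
```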

Digital images can be obtained in two ways: directly from sensors during photography, or by digitally scanning photographs. The acquired images are preprocessed to make them suitable for interpretation and measurement, and then image matching and measurement are performed in a digital photogrammetric system to produce various digital products, which can be output as graphics or images or used directly. Digital photogrammetry is widely applicable: it can process aerial photographs, close-range photographs and other data, can build and update map databases, and can supply data for digital models and the digital earth. It is one of the main means by which geographic information systems obtain ground data.
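Image matching, the step that finds the same ground point in overlapping images, is often done by area-based correlation. The sketch below uses normalized cross-correlation (NCC), one common similarity measure, with an exhaustive search over a small search window. Production systems add image pyramids, epipolar constraints and sub-pixel refinement, none of which are shown; the images are tiny hypothetical examples.

```python
import math
from typing import List, Tuple

Image = List[List[float]]


def ncc(a: Image, b: Image) -> float:
    """Normalized cross-correlation of two equally sized patches (-1 to 1)."""
    va = [v for row in a for v in row]
    vb = [v for row in b for v in row]
    ma, mb = sum(va) / len(va), sum(vb) / len(vb)
    num = sum((x - ma) * (y - mb) for x, y in zip(va, vb))
    den = math.sqrt(sum((x - ma) ** 2 for x in va) * sum((y - mb) ** 2 for y in vb))
    return num / den if den > 0 else 0.0  # flat patches carry no match information


def match(template: Image, search: Image) -> Tuple[int, int, float]:
    """Slide the template over the search image; return (row, col, score) of the best NCC."""
    th, tw = len(template), len(template[0])
    best = (-1, -1, -2.0)
    for r in range(len(search) - th + 1):
        for c in range(len(search[0]) - tw + 1):
            window = [row[c:c + tw] for row in search[r:r + th]]
            score = ncc(template, window)
            if score > best[2]:
                best = (r, c, score)
    return best


if __name__ == "__main__":
    search = [
        [0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0],
        [0, 9, 1, 0, 0],
        [0, 5, 7, 0, 0],
        [0, 0, 0, 0, 0],
    ]
    template = [[9, 1], [5, 7]]
    print(match(template, search))
```

NCC is insensitive to uniform brightness and contrast changes between the two images, which is why it is a common default for area-based matching.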

The biggest differences between digital photogrammetry and analog or analytical photogrammetry are that digital photogrammetry takes digital or digitized images as its raw data, ultimately replaces human stereo viewing with computer vision, and uses only a general-purpose computer and its peripherals. Its products are in digital form, whereas traditional products are merely analog outputs of these digital products.

Oblique Photogrammetry

Concepts of Oblique Photogrammetry

Oblique photography is a technology developed in recent years in the field of surveying and mapping. Traditional aerial photography can only capture features from a vertical angle. Oblique photogrammetry overcomes this limitation by mounting multiple sensors on the same platform and collecting images from several angles at the same time. This yields rich, high-resolution textures of both the roofs and the facades of buildings. The technique reflects the real condition of features and captures their textures with high precision. It can also generate realistic 3D city models through advanced positioning, fusion and modeling techniques, which effectively compensates for the shortcomings of traditional aerial photography.

Features of Oblique Photogrammetry

Oblique photogrammetry reflects the real condition of features. Through advanced positioning technology, it can also embed precise geographic information and rich image information, which greatly broadens the range of scenarios where aerial photogrammetry can be applied. Oblique imaging has significantly reduced the previously high cost of 3D city modeling and greatly increased its speed. Generating 3D models with oblique photogrammetry has the following advantages:

- High accuracy and high resolution. The oblique camera platform is carried by a low-altitude aircraft and can acquire vertical and oblique images with centimeter-level resolution.

- Rich texture information of features. Oblique photography collects images from several different angles, so it obtains more realistic and detailed textures of feature facades. This makes up for the fact that orthophotos only capture the textures of feature tops.

- Realistic 3D spatial scenes. 3D scenes built from the images carry accurate geographic position and coordinate information for features. They also represent feature details, including protruding roofs, facades and terrain, so the model can be viewed from any angle without blind spots.

Photographic scale

Photo tilt and terrain relief displace image points, so the scale varies across an aerial photograph. The photographic scale is therefore defined by treating the photo as horizontal and the ground as lying at its average elevation. It is the ratio of a line segment l on the photo to the horizontal distance L of the corresponding line on the ground. This also equals the ratio of the aerial camera's principal distance f to the flying height H, where m is the scale denominator:

1/m = l/L = f/H
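The scale relation can be evaluated directly, as in this minimal Python sketch. The focal length, flying height and photo segment length are illustrative values, not figures from a real project. The function names are hypothetical.

```python
def scale_denominator(focal_length_m: float, flying_height_m: float) -> float:
    """Scale denominator m from 1/m = f/H (both lengths in metres)."""
    return flying_height_m / focal_length_m


def ground_length(photo_length_m: float, m: float) -> float:
    """Horizontal ground distance L = l * m for a photo segment of length l (1/m = l/L)."""
    return photo_length_m * m


# Illustrative values only: f = 8.8 mm, H = 176 m
m = scale_denominator(0.0088, 176.0)   # about 20 000, i.e. a scale of roughly 1:20 000
L = ground_length(0.005, m)            # a 5 mm segment on the photo covers about 100 m
print(round(m), round(L, 1))
```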

Altitude (flying height) is the height of the aircraft above a reference level surface at the instant of exposure. Depending on the datum used, it is divided into relative altitude and absolute altitude.

Relative Altitude (Photographic Altitude)

This is the height of the aircraft at the instant of exposure above a level datum, namely the average ground elevation of the photographed area. It is the design flying height and the basic parameter for planning the flight. It is generally calculated as H = mf.

Absolute Altitude H(absolute)

This is the height of the aircraft above mean sea level at the instant of exposure, that is, its true altitude. It is calculated from the relative altitude H and the average ground elevation of the photographed area H(ground):

H(absolute)=H+H(ground)
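The two altitude definitions can be sketched in Python as follows. The scale denominator, the focal length and the 350 m mean ground elevation are hypothetical example values, and the function names are illustrative.

```python
def relative_altitude(m: float, focal_length_m: float) -> float:
    """Design (relative) altitude above the mean ground elevation: H = m * f."""
    return m * focal_length_m


def absolute_altitude(relative_h_m: float, mean_ground_elev_m: float) -> float:
    """Altitude above mean sea level: H(absolute) = H + H(ground)."""
    return relative_h_m + mean_ground_elev_m


H = relative_altitude(20000, 0.0088)   # scale 1:20 000, f = 8.8 mm -> about 176 m
H_abs = absolute_altitude(H, 350.0)    # hypothetical mean ground elevation of 350 m
print(round(H, 1), round(H_abs, 1))
```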

Selection of Photographic Scale

The photographic scale should be chosen according to three factors: the mapping scale, the office mapping method used for the survey area and the required mapping accuracy. Cost and the usability of the photographic data should also be considered.

The larger the photographic scale, the higher the ground resolution of the photos. This makes image interpretation easier and improves mapping accuracy. However, if the scale is too large, cost and workload increase. The photographic scale should therefore be selected according to the mapping specifications, based on the accuracy requirements of the topographic mapping and the ground information to be obtained.

Once the camera and photographic scale have been chosen, the aircraft should fly at the design altitude H = mf to obtain the corresponding aerial photographs. In practice, air currents and other factors cause the altitude to vary, so the following limits apply:

- The altitude difference between adjacent photos on the same strip should not exceed 30 m.
- The difference between the maximum and minimum altitudes should not exceed 50 m.
- The difference between the actual and the design altitude should not exceed 50 m.
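The three altitude limits above can be checked against recorded flying heights with a minimal Python sketch like the one below. It assumes that the heights of one strip are available as a list in meters and applies the maximum-minimum limit within that single strip; that scope is an assumption, since the text does not say. The function name and the sample heights are hypothetical.

```python
def check_strip_heights(heights, design_h, adj_tol=30.0, range_tol=50.0, design_tol=50.0):
    """Return a list of problems found in the recorded flying heights (m) of one strip."""
    problems = []
    # Adjacent photos on the same strip: difference must not exceed 30 m.
    for i in range(1, len(heights)):
        if abs(heights[i] - heights[i - 1]) > adj_tol:
            problems.append(f"photos {i - 1}-{i}: adjacent difference > {adj_tol} m")
    # Maximum minus minimum altitude: must not exceed 50 m (applied per strip here).
    if heights and max(heights) - min(heights) > range_tol:
        problems.append(f"max-min difference > {range_tol} m")
    # Actual versus design altitude: must not differ by more than 50 m.
    for i, h in enumerate(heights):
        if abs(h - design_h) > design_tol:
            problems.append(f"photo {i}: deviates > {design_tol} m from design altitude")
    return problems


print(check_strip_heights([180, 185, 176, 190], design_h=182))
print(check_strip_heights([180, 215, 176], design_h=182))
```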

Photo overlap

Stereo mapping and the joining of adjacent strips both require the photographs to overlap. Overlap is needed along the flight direction (heading overlap) and between strips (side overlap). The overlap between two neighboring photos on the same strip is called the heading overlap, and its percentage of the frame length is the heading overlap degree. The overlap between photos on two adjacent strips is called the side overlap, and its percentage of the frame width is the side overlap degree.

Heading overlap Px% = Px / Lx × 100%

Side overlap Py% = Py / Ly × 100%

Here Lx and Ly are the frame dimensions along and across the flight direction. Px and Py are the lengths of the overlapping image portion in the heading and side directions, respectively.
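Both overlap degrees use the same ratio, so a single helper covers them. In this minimal Python sketch, the 230 mm frame and the overlap lengths are hypothetical measurements.

```python
def overlap_percent(overlap_len: float, frame_len: float) -> float:
    """Overlap degree in percent: P% = P / L * 100 (same length unit for both)."""
    if frame_len <= 0:
        raise ValueError("frame length must be positive")
    return overlap_len / frame_len * 100.0


# Hypothetical measurements on a 230 mm x 230 mm frame
px = overlap_percent(140.0, 230.0)   # heading overlap, about 60.9 %
py = overlap_percent(70.0, 230.0)    # side overlap, about 30.4 %
print(f"Px% = {px:.1f} %, Py% = {py:.1f} %")
```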

Overlap is a necessary condition for stereo mapping and for connecting photos. Besides heading and side overlap, any three consecutive photos along a strip must share a common overlapping area, called triple overlap, which is required for selecting photogrammetric control points. Image quality near the edges of a photo is poorer and would reduce measurement accuracy. For this reason, the overlap between the first and third photos of such a triple must not be too small. Over undulating ground, overlap should be increased so that stereo measurement and photo joining remain possible. In general:

- Heading overlap should be 60% to 80%, and no less than 53%.
- Side overlap should be 15% to 60%, and no less than 8%.
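The triple-overlap requirement follows from simple geometry. The sketch assumes equally spaced exposures, so each air base is (1 − p) of the frame length. Photo 3 is then shifted by 2(1 − p) relative to photo 1, and the two share 1 − 2(1 − p) = 2p − 1 of the frame. At the usual 60% heading overlap this leaves 20%, and at the 53% minimum only 6%. The checking function is a hypothetical helper that encodes the ranges quoted above.

```python
def triple_overlap(p_heading: float) -> float:
    """Overlap of photos 1 and 3 as a fraction of the frame length.

    Assumes equally spaced exposures: photo 3 is shifted by 2 * (1 - p),
    so the shared part is 1 - 2 * (1 - p) = 2p - 1 (zero if negative).
    """
    return max(0.0, 2.0 * p_heading - 1.0)


def check_overlaps(p_heading: float, p_side: float) -> list[str]:
    """Compare overlaps (given as fractions) with the ranges quoted in the text."""
    notes = []
    if p_heading < 0.53:
        notes.append("heading overlap below the 53% minimum")
    elif not 0.60 <= p_heading <= 0.80:
        notes.append("heading overlap outside the usual 60-80% range")
    if p_side < 0.08:
        notes.append("side overlap below the 8% minimum")
    elif not 0.15 <= p_side <= 0.60:
        notes.append("side overlap outside the usual 15-60% range")
    return notes


print(round(triple_overlap(0.60), 2))   # 0.2
print(check_overlaps(0.65, 0.30))       # []
print(check_overlaps(0.50, 0.05))
```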

Photogrammetry Products

DOM (Digital Orthophoto Map)

A Digital Orthophoto Map (DOM) is produced from aerial photographs or remote sensing images (monochrome or color). The images are scanned, processed one by one with radiometric correction, differential rectification and mosaicking, and clipped to the extent of the topographic map sheets. Topographic information is then added to the image as symbols, line work, annotations, kilometer grids and map border decorations (inner and outer). The result is stored as raster data in an image database. A DOM has both the geometric accuracy of a topographic map and the characteristics of an image.

- Image Characteristics

A digital orthophoto combines the geometric accuracy of a map with the features of an image. DOMs offer high accuracy, rich information, intuitive and realistic presentation, and fast production. They can serve as background control information for map analysis. Historical or current information on natural resources and socio-economic development can be extracted from them, which provides a reliable basis for disaster prevention and control and for planning public facilities. New information can also be extracted and derived from DOMs to revise and update maps. In practice, DOMs have also proven valuable for evaluating the accuracy, realism and completeness of other data.

- Production Method

Data sources, technical conditions and equipment all vary, so digital orthophotos can be produced in several ways. The three main ones are:

- Full Digital Photogrammetry Method

This method is carried out in a digital photogrammetric system in the following steps:

1. Perform interior orientation, relative orientation and absolute orientation of the digital images to build a DEM (digital elevation model).
2. Differentially rectify each image numerically using the indirect (inverse) method.
3. Mosaic the single-image orthophotos.
4. Clip the mosaic along the map sheet outlines.
5. Add place names, the kilometer grid and map border decorations.
6. After editing, save the result as the DOM.

- Single-Image Digital Differential Rectification

If DEM data and image control results already exist for an area, they can be used directly to produce the DOM. The main steps are:

1. Scan the aerial negative.
2. Orient the digital image using the coordinates of the control points.
3. Apply digital differential rectification with the DEM.

- Orthophoto Scanning

If an orthophoto produced by optical projection already exists, it can be scanned and digitized directly and then geometrically corrected to obtain a digital orthophoto. Geometric correction is a direct numerical simulation of the scanning transformation. The overall deformation of the scanned image can be regarded as a combination of basic transformations such as translation, scaling, rotation, affine transformation, skew and bending.
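Translation, scaling, rotation and skew are all linear in homogeneous coordinates, so their combination is a single affine matrix. The minimal pure-Python sketch below composes them and applies the result to a point. The function names are illustrative, not taken from any particular photogrammetry package. Bending is nonlinear and would need a higher-order model (for example a polynomial), which this sketch does not cover.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 3 x 3 homogeneous transformation matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def translation(tx: float, ty: float) -> Matrix:
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def scaling(sx: float, sy: float) -> Matrix:
    return [[sx, 0.0, 0.0], [0.0, sy, 0.0], [0.0, 0.0, 1.0]]


def rotation(theta: float) -> Matrix:
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def skew(kx: float) -> Matrix:
    """Shear along x: x' = x + kx * y."""
    return [[1.0, kx, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def apply(m: Matrix, x: float, y: float) -> tuple[float, float]:
    """Apply a homogeneous affine matrix to the point (x, y)."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


# Scale by 2, then rotate 90 degrees, then translate by (10, 5):
# the rightmost matrix is applied first.
combined = matmul(translation(10.0, 5.0), matmul(rotation(math.pi / 2), scaling(2.0, 2.0)))
print(apply(combined, 1.0, 0.0))   # approximately (10, 7)
print(apply(skew(0.5), 2.0, 2.0))
```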


DEM (Digital Elevation Model)

A Digital Elevation Model (DEM) is a data set of plane coordinates (x, y) and elevations (z) of regular grid points within a given area. It mainly describes the spatial distribution of landforms. It is produced by collecting data (sampling and measurement) from contour lines or similar 3D models and then interpolating.

A DEM is a physical ground model that represents ground elevation as an ordered array of numbers. It is a branch of the digital terrain model (DTM), and other terrain characteristics can be derived from it. A DTM is generally understood to describe the spatial distribution of various terrain factors, including elevation, and of their linear and nonlinear combinations, such as slope, aspect and rate of change of slope. A DEM, by contrast, is a single-factor model that contains elevation only. The other terrain factors, such as slope, aspect and rate of change of slope, can be derived from it.

- Construction methods

A DEM can be built in several ways, depending on the data source and acquisition method:

- Direct measurement on the ground. This can use simple instruments such as levels, measuring pins and relative-elevation measuring boards, or higher-end equipment such as GPS receivers and total stations in field surveys.

- Photogrammetric processing of aerial or satellite imagery, for example stereo coordinate observation with aerial triangulation, analytical plotting or digital photogrammetry.

- Extraction from existing topographic maps by grid reading, manual tracing with a digitizer or semi-automatic extraction from scanned maps, followed by interpolation to generate the DEM.

- Calculation method

Two data structures are used: the regular grid and the triangulated irregular network (TIN). A common approach is to build a TIN from contour lines and spot heights, and then derive the DEM from the TIN by linear or bilinear interpolation.
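Linear interpolation on a TIN treats each triangle as a plane through its three vertices. The minimal Python sketch below uses barycentric weights to interpolate within a triangle, then samples a regular grid from a list of triangles. The triangles, grid coordinates and function names are hypothetical. A production implementation would also need a spatial index rather than testing every triangle for every cell.

```python
def interpolate_in_triangle(p, a, b, c):
    """Planar (linear) elevation at p = (x, y) inside triangle a, b, c.

    Each vertex is (x, y, z). Returns None if p lies outside the triangle.
    """
    x, y = p
    (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = a, b, c
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    if det == 0:
        raise ValueError("degenerate triangle")
    # Barycentric weights of p with respect to the three vertices.
    w1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    w2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    w3 = 1.0 - w1 - w2
    if min(w1, w2, w3) < -1e-12:
        return None
    return w1 * z1 + w2 * z2 + w3 * z3


def grid_from_tin(triangles, xs, ys):
    """Sample a regular grid (rows over ys, columns over xs) from TIN triangles.

    Cells that fall outside every triangle are left as None.
    """
    grid = []
    for y in ys:
        row = []
        for x in xs:
            z = None
            for tri in triangles:
                z = interpolate_in_triangle((x, y), *tri)
                if z is not None:
                    break
            row.append(z)
        grid.append(row)
    return grid


# Two hypothetical triangles covering a 10 m x 10 m square (surface z = 100 + x + 2y).
tin = [
    ((0, 0, 100), (10, 0, 110), (0, 10, 120)),
    ((10, 0, 110), (10, 10, 130), (0, 10, 120)),
]
dem = grid_from_tin(tin, xs=[0, 5, 10], ys=[0, 5, 10])
print(dem)
```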

TIN data can describe surface morphology at different levels of resolution. At a given resolution, a TIN can represent complex surfaces more accurately than a grid model while using less storage and processing time. This advantage is greatest when the terrain contains many features such as fault lines and structural lines, which a TIN can take into account explicitly.

- Resolution

The resolution of a DEM is the length of its smallest cell. It is an important indicator of how accurately the DEM depicts the terrain, and a major factor in determining its range of use. Because a DEM is discrete, each (X, Y) position actually represents a small square cell labeled with a single elevation, and the side length of that square is the DEM resolution. The smaller the cell size, the higher the resolution and the more accurately the terrain is depicted. However, the amount of data grows with the square of the resolution, so halving the cell size quadruples the number of cells. A DEM should therefore be created with a balance between accuracy and data volume that suits its purpose.

- Applications

Because a DEM describes ground elevation, it is widely used in surveying and mapping, hydrology, meteorology, geomorphology, geology, soil science, engineering construction, communications and the military. It is also used elsewhere in the national economy and national defense, and in the humanities and natural sciences. Some examples:

- In engineering construction, it supports earthwork calculation and visibility analysis.
- In flood prevention and mitigation, it supports hydrological analysis such as catchment analysis, drainage network analysis, rainfall analysis, flood storage calculation and inundation analysis.
- In wireless communications, it supports siting analysis for cellular base stations.

- Ground resolution (GSD) and model accuracy

Ground resolution, also known as ground sample distance (GSD), is the size of the ground area, in meters, represented by one pixel. Its value depends on the flying height, the focal length of the camera and the sensor's pixel size. GSD is calculated as follows (unit: meters/pixel):

Ground resolution = Sensor width × Flying height / (Focal length × Image width in pixels)

The same formula can be rearranged to calculate the flying height. Take the DJI Phantom 4 RTK as an example:

- The sensor measures 13.2 mm × 8.8 mm.
- The image frame is 5472 × 3648 pixels, so the pixel size is about 2.41 μm (13.2 mm / 5472 ≈ 2.41228 μm).
- The camera's focal length is 8.8 mm.

Substituting these values into the formula gives H ≈ 36.5 × GSD, with H in meters and GSD in centimeters per pixel. If the required GSD is 5 cm/pixel, the calculated flying height is about 182 m. Environmental factors during flight affect image quality, so the actual flying height is usually set lower than the calculated value. Here, a flying height of 150 m could be chosen to ensure that the data meet the requirements.
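The GSD formula and its inverse can be sketched in Python as follows. The camera figures are the Phantom 4 RTK values quoted above. The function names are illustrative, and the calculation assumes that the sensor width and the image width in pixels refer to the same (long) side of the frame.

```python
def gsd_m(sensor_width_mm: float, focal_length_mm: float,
          image_width_px: int, height_m: float) -> float:
    """GSD (m/pixel) = sensor width * H / (focal length * image width in pixels)."""
    return sensor_width_mm * height_m / (focal_length_mm * image_width_px)


def flight_height_m(sensor_width_mm: float, focal_length_mm: float,
                    image_width_px: int, target_gsd_m: float) -> float:
    """Invert the GSD formula to get the flying height for a target GSD."""
    return target_gsd_m * focal_length_mm * image_width_px / sensor_width_mm


# Phantom 4 RTK values from the text: 13.2 mm sensor width, 8.8 mm focal length, 5472 px
h = flight_height_m(13.2, 8.8, 5472, 0.05)
print(round(h, 1))                                      # 182.4
print(round(gsd_m(13.2, 8.8, 5472, 150.0) * 100, 2))    # GSD in cm/pixel at 150 m
```

Flying at 150 m instead of about 182 m therefore gives a slightly finer GSD (about 4.1 cm/pixel), which is why the lower altitude still meets a 5 cm/pixel requirement.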

Model accuracy is usually expressed as the root-mean-square error of the differences between two sets of positions: check points measured in the field with survey instruments, and the same points measured on the model. For oblique photography, modeling accuracy is generally about three times the ground resolution of the photos, that is, three times the ground resolution of the orthophoto generated from them. For example, if the orthophoto resolution is 2 cm/pixel, the modeling accuracy is roughly 5 to 10 cm.

- Image resolution

Image resolution is how ground resolution appears on a particular image at a given scale, so it varies with the image scale. The more pixels a sensor has, the higher its maximum image resolution. Pixel counts are quoted either as effective pixels or as total CCD/CMOS pixels. For example, a 5-megapixel digital camera has a maximum image resolution of 2592 × 1944 pixels, whereas an 8-megapixel camera has a maximum of 3264 × 2448 pixels.

Execution planning for oblique photography missions

The field work of oblique photography is divided into the following three parts:

- Documentation and program preparation

Mainly includes collecting project information, understanding the specific conditions of the project, applying for airspace and developing the technical plan.

- Organization and implementation of aerial photography

Mainly includes laying out and measuring image control points, and organizing and carrying out the field flight plan.

- Data collation and inspection

Mainly includes organizing and checking flight data, classifying aerial image data and inspecting its quality.

Pre-Operation Preparation

Network RTK Signal Coverage Inquiry

Taking DJI UAVs as an example, you can check the network RTK coverage of the mission area in the GS RTK app.

Device Firmware Update Check

Update the drone's firmware before departure so that firmware updates do not waste shooting time during the mission. Note that the drone batteries must be updated one at a time.

Collection of corresponding mapping data for the survey area

Collect existing topographic maps, aerial images, existing control point results and other data for the survey area. These help optimize the design of the survey area and improve work efficiency.

Cache Task Area Map

Open the GS RTK app and cache the map of the mission area. This prevents a lack of network coverage on site from affecting route planning or flight safety.

Advance planning of mission areas

Create a KML file of the survey area in the GS RTK app or Google Earth to plan the mission routes in advance and save time on site.

Dividing the Mission Area

It is recommended to divide a large mission area into blocks as follows, and to set the take-off point in the middle of each block to ensure stable signal transmission:

- 1 km × 0.5 km blocks for missions flown at 100 m altitude and 7 m/s.
- 1 km × 1 km blocks for missions at 150 m and 11 m/s.
- 1 km × 1.5 km blocks for missions at 200 m and 13 m/s.
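The block sizes recommended above can be turned into a simple planning helper. This minimal Python sketch assumes that the mission area is an axis-aligned rectangle measured in meters and that blocks are laid out from one corner, with the last row and column clipped to the boundary. The preset table and function name are hypothetical, and the speeds are stored for reference only.

```python
import math

# Block sizes (x extent, y extent) in metres and speeds recommended in the text, keyed by altitude (m).
BLOCK_PRESETS = {
    100: {"block_m": (1000.0, 500.0), "speed_ms": 7.0},
    150: {"block_m": (1000.0, 1000.0), "speed_ms": 11.0},
    200: {"block_m": (1000.0, 1500.0), "speed_ms": 13.0},
}


def split_mission_area(width_m: float, height_m: float, altitude_m: int):
    """Return (number of blocks, list of block-centre take-off points) for a rectangle."""
    if altitude_m not in BLOCK_PRESETS:
        raise ValueError("no preset for this altitude")
    bw, bh = BLOCK_PRESETS[altitude_m]["block_m"]
    nx = math.ceil(width_m / bw)
    ny = math.ceil(height_m / bh)
    centres = []
    for j in range(ny):
        for i in range(nx):
            # Clip the last block in each direction to the area boundary.
            x0, x1 = i * bw, min((i + 1) * bw, width_m)
            y0, y1 = j * bh, min((j + 1) * bh, height_m)
            centres.append(((x0 + x1) / 2, (y0 + y1) / 2))
    return nx * ny, centres


count, takeoff_points = split_mission_area(2500.0, 1000.0, 150)
print(count, takeoff_points)
```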

Improve the signal strength between the drone and the remote controller.

Distance, physical obstacles (large buildings, hills, trees) and electromagnetic interference (for example, power lines, reinforced concrete or large metal objects) are the most common reasons for losing the signal between the drone and the remote controller. To avoid this, the following practices are recommended:

- Take off inside the mission area whenever possible, preferably from its highest point, since a favorable position increases the transmission distance.
- Adjust the antennas according to the drone's position so that they always face the drone.
- Avoid sources of interference by keeping the flight path away from high-voltage lines and towers. Avoid having more than five drones flying in the same area.
- Avoid flying the drone beyond visual line of sight. If the mission requires flying out of sight, monitor the aircraft's flight parameters at all times and keep track of the direction of its nose.

Technical Design Solutions

Basic requirements and technical basis of the project

- Define the basic requirements of the project. The project is designed and organized strictly according to the technical requirements provided by the client. The work includes aerial photography, image control surveying, DOM production and oblique photography model production, followed by delivery of data results that meet the specifications and technical requirements.

- Define the schedule: the completion date of the preliminary plan and formalities, the start and completion dates of aerial photography, and the delivery date of the data results.

- Define the mathematical basis of the technical plan: the coordinate system and the elevation datum.

- Specify the required deliverable formats: original aerial image format, POS data file, image control points, oblique 3D model resolution, planimetric accuracy, result format and so on.

- Identify the referenced standards and the operating basis for the plan design.

Design Implementation Plan

Oblique Photography Plan Design

The oblique camera system is selected mainly according to the project's technical requirements and the extent and characteristics of the survey area. It consists of three parts:

- The flight platform.
- The personnel, that is, the ground crew, including the drone pilot and the ground-station flight controller.
- The camera equipment, that is, the lens platform and the cameras.

- Route Planning and Design

Route layout is particularly important. It must ensure that the acquired images meet mapping industry requirements, while minimizing the number of flights needed to cover large areas to avoid wasting labor and materials. Route design is the key to producing high-quality image maps. Routes must be designed according to the topography of the survey area and must provide sufficient overlap for orthophoto production. The drone route design therefore needs to take all relevant factors into account to ensure flight safety and images that meet the requirements.

Factors affecting drone route design include the topography of the survey area, weather, flight safety, resolution and overlap. The main route design parameters are ground resolution, flight speed, image overlap, altitude, gimbal (PTZ) tilt angle and image frame size.

Under normal circumstances, an ideal route design has the following characteristics:

- The survey area has a regular shape and the routes run basically in one direction.

- All routes are flown at the same altitude.

- Ground overlap is generally 75% heading overlap and 50% side overlap.

- The survey area contains no difficult terrain such as forests, snow or water, because images of these surfaces cannot be matched well.

The following points should be noted when laying out routes for projects with complex terrain:

- Route design for difficult terrain. Difficult terrain requires high route overlap to ensure a complete model.

For this type of route, note the following: (i) ground overlap should be 80% heading overlap and 60% side overlap; (ii) if model matching completeness is below 95%, that is, if fewer than 95% of the photographs are matched and used to build the model, the overlap is insufficient.

- Route design for strip-shaped features. Strip-shaped survey areas include railways, highways and rivers. Note the following when designing routes for them:
  ① Design the routes for a strip area as a double-track route. If conditions permit, more routes give more reliable results.
  ② Control points are not strictly necessary for strip features, but they do help improve the positional accuracy and completeness of the model.
  ③ A single-track route is not recommended for strip survey areas, as it may lead to a distorted and incomplete model.
  ④ If conditions allow only a single-track route, control points are necessary to avoid model distortion and deformation.
  ⑤ Strip survey areas need high overlap: a heading overlap of 85% and a side overlap of 60% are recommended.

- Multi-area route design. When designing routes across multiple areas, note the following:
  ① To build a good model, adjacent routes need sufficient overlap. The minimum depends on the terrain: 75% heading overlap and 50% side overlap under ideal terrain conditions, and 85% heading overlap and 60% side overlap under complex terrain conditions.
  ② Multi-area routes should be designed to minimize environmental changes between routes, for example in lighting, weather, moving objects or new buildings.
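The overlap recommendations in these route design notes can be collected into a small lookup table, together with the 95% matching-completeness check. In this minimal Python sketch, the preset keys and function names are hypothetical labels for the cases described above, and overlaps are stored as (heading, side) fractions.

```python
# (heading overlap, side overlap) recommended in the route design notes above.
OVERLAP_PRESETS = {
    "ideal": (0.75, 0.50),
    "difficult": (0.80, 0.60),
    "strip": (0.85, 0.60),
    "multi_area_complex": (0.85, 0.60),
}


def overlap_for(terrain: str) -> tuple[float, float]:
    """Look up the recommended (heading, side) overlap for a terrain type."""
    return OVERLAP_PRESETS[terrain]


def overlap_sufficient(matched_photos: int, total_photos: int, threshold: float = 0.95) -> bool:
    """Completeness check: fewer than 95% of photos matched means the overlap is insufficient."""
    if total_photos <= 0:
        raise ValueError("total_photos must be positive")
    return matched_photos / total_photos >= threshold


print(overlap_for("strip"))
print(overlap_sufficient(940, 1000))   # 94% matched -> False
```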

- Height and flight speed determination

- Altitude calculation: the flying altitude for oblique photography depends on the project's ground resolution (GSD) and the pixel size of the sensor used. It is calculated with the following equation:

a / GSD = f / h, therefore h = f × GSD / a

where

h - relative flying altitude;

f - lens focal length;

a - sensor pixel size;

GSD - ground sample distance (ground resolution).

The lower the flying altitude, the smaller the ground resolution value and the sharper the images (Figure 5-6 Relationship between altitude and ground resolution). However, a lower altitude also has drawbacks that must be weighed together:

- Building shadows are stretched.
- More images are needed for the same survey area, which makes later data processing harder.
- Flight safety margins are reduced.

- Flight speed calculation: the drone keeps moving while it takes images, and each exposure lasts a certain time. During the exposure, the drone's motion makes a ground point move in the image. This distance is called image point displacement (image motion). It depends on the flight speed, the exposure time and the ground resolution, as follows:

δ = vt/GSD

where

δ - image point displacement, in pixels;

v - flight speed, in m/s;

t - exposure time, in s;

GSD - ground resolution, in meters.

- Overlap rate setting: the general rule is a heading overlap of 60%, and no less than 53%. Where the ground is more undulating, overlap should be increased. Since the adoption of digital aerial cameras, heading overlap in low-altitude photogrammetry, and especially in oblique photogrammetry, now exceeds 70%, with side overlap above 60%. Higher overlap has both benefits and costs:

  - Benefit: two neighboring images share more feature points and more tie points can be matched, which improves positioning accuracy.
  - Cost: more images are produced, which increases the office data-processing workload.

  To balance positioning accuracy against efficiency, overlap is usually set to 80% heading and 70% side.
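Speed, exposure time and overlap together constrain the flight. This minimal Python sketch evaluates δ = vt/GSD, solves it for the highest speed that stays within a chosen displacement tolerance, and derives the time between exposures needed for a given heading overlap. Several values are hypothetical: the 1/500 s shutter time, the 1-pixel tolerance and the function names. The sketch also assumes that the frame's 3648-pixel short side is oriented along the flight direction.

```python
def image_motion_px(speed_ms: float, exposure_s: float, gsd_m: float) -> float:
    """Image point displacement in pixels: delta = v * t / GSD."""
    return speed_ms * exposure_s / gsd_m


def max_speed_ms(max_motion_px: float, exposure_s: float, gsd_m: float) -> float:
    """Highest speed keeping displacement within max_motion_px (delta = v * t / GSD solved for v)."""
    return max_motion_px * gsd_m / exposure_s


def shot_interval_s(gsd_m: float, frame_px_along_track: int,
                    heading_overlap: float, speed_ms: float) -> float:
    """Time between exposures that yields the requested heading overlap."""
    footprint_m = gsd_m * frame_px_along_track          # ground length of one frame
    spacing_m = footprint_m * (1.0 - heading_overlap)   # distance flown between exposures
    return spacing_m / speed_ms


gsd = 0.05          # 5 cm/pixel
exposure = 1 / 500  # hypothetical shutter time, s
print(round(image_motion_px(10.0, exposure, gsd), 2))    # 0.4 px at 10 m/s
print(round(max_speed_ms(1.0, exposure, gsd), 1))        # speed limit for a 1 px tolerance
print(round(shot_interval_s(gsd, 3648, 0.80, 10.0), 3))  # seconds between shots
```

Under these assumptions, blur allows up to about 25 m/s, so in practice the overlap and shooting interval are more likely to be the limiting factors.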

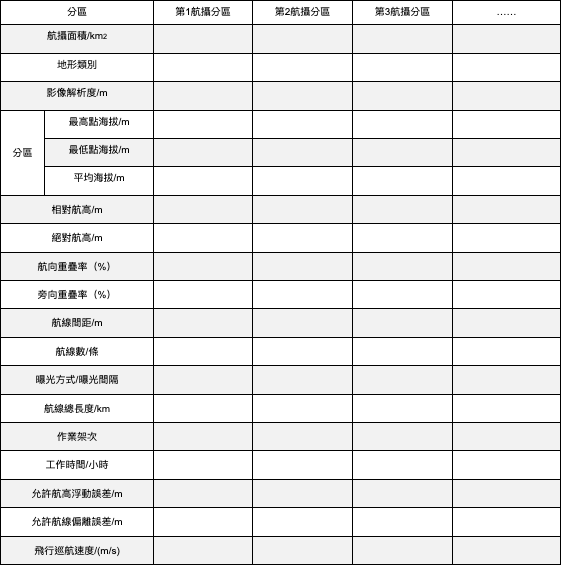

- Parameter design of aerial photography factors: see the following table.


Drone Aerial Photography

Equipment Assembly and Inspection

- Flight parameter setting: set the flight speed, flight altitude, heading overlap, side overlap and so on. Base these settings on the size of the flight area, the altitude permitted by the flight environment and the accuracy requirements.

- Flight safety analysis: after plotting the route area, check the relative positions and elevation differences of each part of the area in Google Earth to assess the safety of the planned flight area. Then survey the site to find a suitable take-off and landing site. When determining the flight altitude, check the route area for hazards such as high mountains, tall buildings and power towers.

- Camera check: the cameras are the oblique image acquisition devices. Set their ISO sensitivity, focal length and shutter speed to values suited to the task requirements and weather conditions, and make sure all cameras use identical settings. After setting the parameters, fix the cameras in the gimbal (PTZ), make sure they are switched on and remove the lens caps.

- Drone check: use a compass to check the heading of the drone's nose. Compare it with the compass reading in the flight control software to confirm that the software shows the correct direction. Then power on the aircraft and test the gimbal (PTZ) with the remote controller.

- First-flight joint test: after the crew and equipment arrive on site, a test flight is arranged before the official shoot. It tests the digital aerial camera, the exposure settings, the coordination between the flight crew and the aerial photography staff, the performance of the aircraft's camera window, and the organization and coordination of the flight. This ensures that the aerial photography project proceeds smoothly.

  Before takeoff, carry out the basic aerial camera inspections, such as checking the power supply of the camera mounts, lenses, flight control system and orientation system. Confirm that the electrical circuits, mechanical transmission parts, flight management software and data recording disks work properly, and that all equipment parameters are set correctly.

  After the first flight, the photographer immediately checks whether the images are sharp and the saved data are complete. Quality control staff should promptly report the inspection results to the site and suggest improvements to flight and image quality. If no problems are found in the first flight, the follow-up flights can be arranged.

Drone Aerial Photography

Following the zoned aerial photography plan, find a suitable take-off point and photograph each area in turn:

1. After checking the equipment and confirming that the take-off area is safe, unlock the drone for takeoff.
2. During takeoff, the pilot controls the aircraft in real time with the remote controller. The ground-station flight controller monitors the aircraft's status through the telemetry it transmits.
3. Once the aircraft reaches a safe altitude, the pilot retracts the landing gear with the remote controller and switches to automatic mission flight mode.
4. Throughout the flight, the pilot keeps the drone in visual sight to follow its movements. The ground-station flight controller watches the battery status, flight speed, altitude, attitude and route progress in the flight control software to ensure flight safety.

After completing the flight, make sure the landing site is safe and clear of passers-by. After landing, check that the image data in the camera and the records in the flight control system are complete. Once acquisition is finished, inspect the quality of the acquired images and re-fly any unqualified areas until the imagery meets the requirements. Parts of buildings and the ground that the oblique lenses cannot see (dead zones) cause distortion, stretching, and holes in the model and its textures, so it is recommended to additionally capture the sides of buildings to further improve model quality.

Image control point placement and measurement

Field control points are the basis for aerial triangulation densification and for mapping in aerial photography. They fall into three types: plane control points, elevation control points, and plane-elevation control points. A plane control point fixes only the plane coordinates of a point, an elevation control point fixes only its elevation, and a plane-elevation control point fixes both. The layout scheme of the image control points is closely tied to the accuracy of the free-network adjustment: a sensible layout effectively limits error propagation, keeping errors small and evenly distributed, which is why the layout is so important.

- Principles for Selecting Image Control Points

The selection and placement of image control points depend not only on the layout plan but also on the image: whether the point contrasts clearly in color with its surroundings, whether it is easy to identify on the image, and whether signal interference or similar problems are present.

The following factors should be taken into consideration when placing control points:

- Image control point targets should be of a suitable size and are usually placed on relatively flat ground, at corners of ground markings, or at corners of objects, so that the points are easy to find and locate and are clear and easy to recognize on the image.

- To minimize the effect of relief displacement on the accuracy of image matching, image control points should be at least 1 to 1.5 cm from the image edge.

- Since shadows can make the image control point target unclear on the image, you should avoid shaded areas when laying out the image control points in the field.

- Image control points should be chosen in open areas, away from TV towers, signal towers, large bodies of water, and other sources of signal interference, so that the measurement accuracy of the points is not affected.

- Image control points should be placed so that they can be shared between images, usually appearing in the overlap of 5 or 6 images, more than 150 pixels from the image edges, and more than 1 mm from any markings on the image. The image control points around the perimeter of the block must control the whole area to be surveyed.

- Image control points should lie near the centerline of the side overlap. When the side overlap is too small, points in neighboring flight lines cannot be shared and must be placed separately for each line; in that case, the vertical gap between the areas they control should be less than 2 cm on the image. Points must also be placed separately when the side overlap is too large for neighboring lines to share them.

- When an image control point is a plane-elevation point, choose in the field a distinct feature point that is sharp on the image, such as the intersection of nearly perpendicular linear features or a feature corner; the point-pricking error should be less than 0.1 mm at image scale. When the image control point is an elevation point, prefer target points where the local elevation changes little, and do not prick or place image control points on curved features or on slopes with large elevation changes.

- When annotating a control point, mark its position on the image with the point name or number, and record a precise description of the pricked location in a notebook; the point sketch and the description must agree.
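The pixel-based rules above (more than 150 pixels from the image edges, visible on 5 or 6 overlapping images) can be checked automatically once candidate points have been located on the images. The sketch below is a minimal Python illustration, assuming the pixel coordinates of each candidate point on each image are already known and all images have the same size. The names `Observation`, `edge_distance`, and `check_control_point` are hypothetical, and the rules expressed in centimetres or millimetres on the photo are not modeled.

```python
from dataclasses import dataclass

# Thresholds from the placement rules above.
MIN_EDGE_DISTANCE_PX = 150  # keep points more than 150 px from every image edge
MIN_SHARED_IMAGES = 5       # a point should appear on 5 or 6 overlapping images


@dataclass
class Observation:
    """Hypothetical record: where one candidate point appears on one image."""
    image_id: str
    x: float  # column, in pixels from the left edge
    y: float  # row, in pixels from the top edge


def edge_distance(obs: Observation, width: int, height: int) -> float:
    """Shortest distance in pixels from the observation to any image edge."""
    return min(obs.x, obs.y, width - obs.x, height - obs.y)


def check_control_point(observations, width, height):
    """Return the images where the point is far enough from the edge,
    and whether enough of them remain for the point to be shared."""
    usable = [
        o.image_id
        for o in observations
        if edge_distance(o, width, height) > MIN_EDGE_DISTANCE_PX
    ]
    return usable, len(usable) >= MIN_SHARED_IMAGES


if __name__ == "__main__":
    # Example values: 6000 x 4000 images; the last point is too close to the left edge.
    obs = [Observation(f"IMG_{i:04d}", 1000 + 100 * i, 2000) for i in range(5)]
    obs.append(Observation("IMG_0005", 100, 2000))
    usable, ok = check_control_point(obs, 6000, 4000)
    print(usable, ok)
```

A candidate that fails the check is a signal to move the target in the field rather than to relax the thresholds.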

- Distribution of image control points

Because oblique photography data include IMU data, and taking the terrain of the survey area into account, the usual scheme is to place groups of points at the corners of the survey area, space points uniformly around the perimeter, and add a small number of points in the interior.

- Marking image control points in the field

To ensure the accuracy of the final data products, image control point markers must be laid out before the flight. They are usually made with paint or lime powder in a "cross" or "L" shape. When using a cross, spray a small circle 5 cm in diameter at its center to make point pricking easier and more accurate. Where the image control point lies on hard ground such as a concrete or asphalt road, paint is generally used for the marker; where the soil is relatively soft, lime powder is generally used.

- Image control point measurement

- Accuracy requirements: the plane and elevation errors of the image control points should not exceed 20 cm.

- Selection of image control points: choose distinct features that are sharp on the image, such as intersections of well-defined linear features and the vertices of sharp corners of distinct features (corners of flat-roofed houses, top corners of walls, top corners of door piers and doors), as well as other piers and columns that are convenient for measuring height and distance. Corners of gable-roofed houses and places with irregular changes in local elevation are not suitable.

- Image control point measurement: the coordinates can be measured with a total station, based on the existing control results for the topographic map of the survey area.

- Image control point annotation: on the original image data, use Photoshop to add annotation marking the point, and save the annotated file with the image control point name as the file name. Field crews can take on-site photos to help office staff locate the points quickly.

- Organizing the results: it is recommended to compile the image control point coordinates in Excel, sorted by control point number.
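The 20 cm accuracy limit and the recommendation to sort results by point number can both be scripted. The sketch below assumes that each control point has been measured twice (for example, an original measurement and an independent check measurement) and interprets the 20 cm limit as a root-mean-square error over the compared points; both are assumptions, since the text does not say how the error is computed. It writes CSV text, which Excel opens directly, rather than a native Excel workbook. All function names are hypothetical.

```python
import csv
import io
import math
import re

LIMIT_M = 0.20  # plane and elevation error limit from the text (20 cm)


def rms_errors(measured, reference):
    """RMS plane and elevation differences (metres) between two measurements.

    Both arguments map point name -> (x, y, z) in metres; only names present
    in both are compared.
    """
    common = sorted(set(measured) & set(reference))
    if not common:
        raise ValueError("no common points to compare")
    sum_plane = sum_height = 0.0
    for name in common:
        (x1, y1, z1), (x2, y2, z2) = measured[name], reference[name]
        sum_plane += (x1 - x2) ** 2 + (y1 - y2) ** 2
        sum_height += (z1 - z2) ** 2
    n = len(common)
    return math.sqrt(sum_plane / n), math.sqrt(sum_height / n)


def within_limit(measured, reference):
    """True if both RMS errors are within the assumed 20 cm limit."""
    plane, height = rms_errors(measured, reference)
    return plane <= LIMIT_M and height <= LIMIT_M


def natural_key(name):
    """Sort key that orders 'P2' before 'P10'."""
    return [int(part) if part.isdecimal() else part
            for part in re.split(r"(\d+)", name)]


def to_csv(points):
    """Point name, X, Y, Z rows sorted by point number, as CSV text for Excel."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["Point", "X", "Y", "Z"])
    for name in sorted(points, key=natural_key):
        writer.writerow([name, *points[name]])
    return buffer.getvalue()
```

The natural sort key matters because a plain string sort would place "P10" before "P2".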

Aerial data collation

Because oblique photography surveys produce very large amounts of data, the image data, POS information, and image control data of a project should be categorized and managed, and related items should be checked for logical errors (that is, consistency of quantities, numbering, times, and so on). Data organization mainly involves the following tasks:

- Organizing images by sortie and lens: first create a directory named "date-sortie" from the capture time, then create a subdirectory for each of the five lens directions, nadir, forward, backward, left, and right (usually named with the letters O, B, H, L, R to make traversal by software easier). Digital cameras number images from 0001 to 9999 within one folder, so more than 9,999 images end up spread over several folders; these must be merged back into a single folder in capture order and renamed chronologically.

- Organizing POS data: POS records must correspond one-to-one with the images. Because aerial triangulation in oblique photogrammetry matches the nadir (ortho) images first, it is usually enough to organize only the nadir images: associate the POS data with the nadir image names and, if the processing software requires it, export them as a TXT file or an Excel table.

- Organizing image control data: using the actual flight lines and the ground coordinates of the image control points, find 5 or more nadir images containing each control point, circle the control point area on them in red, and compile these together with the control point coordinates and the photos taken on the ground during fieldwork.
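The directory and renaming rules above are mechanical enough to plan in code before any files are moved. The sketch below is a hypothetical Python illustration: it assumes capture times have already been read from each image (for example from EXIF), keeps the original file extension, widens the sequence number beyond four digits only when more than 9,999 images exist, and checks the one-to-one correspondence between nadir images and POS records by file name. The function names and the POS record layout (image name first, then other values) are assumptions.

```python
import os
from collections import Counter
from datetime import datetime

LENS_CODES = ("O", "B", "H", "L", "R")  # nadir, forward, backward, left, right


def plan_renames(date: str, sortie: str, lens: str,
                 images: list[tuple[str, datetime]]) -> dict[str, str]:
    """Map each original path to a new path in one "date-sortie/lens" folder.

    `images` holds (original_path, capture_time) pairs for one lens, possibly
    spread over several camera folders; new numbers follow capture time.
    """
    if lens not in LENS_CODES:
        raise ValueError(f"unknown lens code: {lens!r}")
    ordered = sorted(images, key=lambda item: item[1])
    width = max(4, len(str(len(ordered))))  # 0001 style, wider past 9999
    folder = f"{date}-{sortie}/{lens}"
    plan = {}
    for number, (path, _) in enumerate(ordered, start=1):
        extension = os.path.splitext(path)[1]
        plan[path] = f"{folder}/{number:0{width}d}{extension}"
    return plan


def check_pos(nadir_names: list[str], pos_records: list[tuple]) -> list[str]:
    """List logical errors between nadir images and POS rows (name is field 0)."""
    pos_names = [record[0] for record in pos_records]
    problems = []
    if len(pos_names) != len(nadir_names):
        problems.append(
            f"count mismatch: {len(nadir_names)} images, {len(pos_names)} POS rows")
    for name in sorted(set(nadir_names) - set(pos_names)):
        problems.append(f"no POS row for image {name}")
    for name in sorted(set(pos_names) - set(nadir_names)):
        problems.append(f"POS row without image: {name}")
    for name, count in sorted(Counter(pos_names).items()):
        if count > 1:
            problems.append(f"duplicate POS rows for {name}")
    return problems
```

For example, if `100MEDIA/DSC09999.JPG` was taken before `101MEDIA/DSC00001.JPG`, `plan_renames` numbers them `0001` and `0002` in the same lens folder, so capture order survives the camera's folder split.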

Aerial Photography Quality Control

Quality control of oblique photography modeling runs through the entire life cycle of data processing. In the data acquisition stage, it applies systematic methods and a series of practical measures focused on the key data quality issues, controlling accuracy, adjusting results, and correcting errors to ensure the quality of the model.

Pre-flight Quality Control

Follow the technical specifications for aerial photography in surveying and mapping strictly, so as to prevent quality incidents and bring every technical indicator of the product up to a high standard.

- Equipment Inspection

Before takeoff, test all onboard equipment; the aircraft may take off only when every item is working normally.

- Navigation

All aerial photography flights use GPS navigation and follow the designed GPS navigation data strictly. Throughout the flight, monitor the GPS receiver continuously so that a loss of satellite lock, which would make GPS navigation ineffective, is caught immediately.

- Flight altitude

Fly at the design altitude. The altitude difference between adjacent images on the same flight line should not exceed 20 m, the difference between the maximum and minimum altitudes should not exceed 30 m, and the deviation of the actual altitude from the design altitude should be less than 5% of the design altitude.
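The three altitude limits can be checked per flight line from the altitude recorded for each image in the POS data. The sketch below is a minimal Python illustration; it assumes altitudes are in metres, checks the 30 m spread within a single flight line, and applies the 5% rule to each image individually. These scopes are interpretations rather than rules stated in the text, and the function name is hypothetical.

```python
def check_line_altitudes(altitudes: list[float], design_altitude: float) -> list[str]:
    """Return violations of the flight-altitude limits for one flight line."""
    if not altitudes:
        return []
    problems = []
    # Adjacent images on the same line: difference must not exceed 20 m.
    for i in range(1, len(altitudes)):
        step = abs(altitudes[i] - altitudes[i - 1])
        if step > 20.0:
            problems.append(f"images {i - 1}-{i}: adjacent difference {step:.1f} m > 20 m")
    # Highest minus lowest altitude must not exceed 30 m.
    spread = max(altitudes) - min(altitudes)
    if spread > 30.0:
        problems.append(f"max-min altitude difference {spread:.1f} m > 30 m")
    # Deviation from the design altitude must stay below 5% of it.
    tolerance = 0.05 * design_altitude
    for i, altitude in enumerate(altitudes):
        deviation = abs(altitude - design_altitude)
        if deviation >= tolerance:
            problems.append(f"image {i}: deviation {deviation:.1f} m >= 5% of design altitude")
    return problems


if __name__ == "__main__":
    print(check_line_altitudes([100.0, 102.0, 98.0, 101.0], design_altitude=100.0))  # []
```

Any non-empty result marks a flight line to review before deciding whether it needs to be re-flown.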

Principles for Handling Special Flight Situations

- Cross flight lines

In principle, one or two cross flight lines should be flown perpendicular to all adjacent parallel flight lines to ensure accurate tie-in between the flight lines in later data processing.

- Small cross

For each sortie, a calibration-field flight, known as a small cross flight, is flown before or after the official flight-line acquisition so that the data of that sortie can be calibrated accurately.

- Re-flights

Occasional anomalies during data acquisition can leave gaps in the data for some areas. Flight lines with data gaps must be re-flown, and each re-flown line should be extended by a certain length at both ends so that the data from the two flights join well.

- Data anomalies

During flight, the satellite signal may occasionally lose lock briefly, or poor flight conditions such as strong winds or updrafts may change the aircraft's attitude rapidly while satellite reception is weak. When this causes anomalies in the POS data, decide whether the flight line data are valid based on when the anomalies occur within the official flight line and on the accuracy of the whole sortie's POS data, and re-fly the flight line or sortie whose data are invalid.

Flight Program Design

- Provided it is convenient for placing photo control points and does not interfere with normal aerial triangulation in the office, coverage is considered adequate if it extends beyond the survey area boundary by at least 15% of the image width in the side direction and by at least one baseline in the heading direction.

- Forward and side overlap should both be better than 70%, the maximum image point displacement should not exceed 1.5 pixels, and the image rotation (crab) angle should generally not exceed 15°, with an absolute maximum of 25°, provided the forward and side overlaps of the images meet the requirements.

- On any flight line, no more than three consecutive images may reach or approach the maximum crab angle, and images at the maximum crab angle must not exceed 4% of the total number of images in the survey area.
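The flight-design limits above translate into simple formulas and checks. The sketch below is a hypothetical Python illustration with stated assumptions: the ground footprint of one image (along-track and across-track, in metres) is known; the baseline is the along-track footprint times (1 - forward overlap) and the line spacing is the across-track footprint times (1 - side overlap); the 1.5-pixel limit is read as image motion during exposure, computed as ground speed × exposure time ÷ ground sample distance; and, because the text does not define "approaching" the maximum crab angle, any angle above the general 15° limit is counted as approaching it. The example values in the main block are illustrative only.

```python
MIN_OVERLAP = 0.70            # forward and side overlap: better than 70%
MAX_IMAGE_MOTION_PX = 1.5     # maximum image point displacement
CRAB_GENERAL_DEG = 15.0       # general crab-angle limit
CRAB_MAX_DEG = 25.0           # absolute crab-angle limit
MAX_CONSECUTIVE_NEAR_MAX = 3  # per flight line
MAX_NEAR_MAX_SHARE = 0.04     # per survey area


def plan_geometry(along_m, across_m, forward_overlap, side_overlap):
    """Baseline, line spacing and required coverage beyond the area boundary."""
    if forward_overlap <= MIN_OVERLAP or side_overlap <= MIN_OVERLAP:
        raise ValueError("forward and side overlap must be better than 70%")
    baseline = along_m * (1.0 - forward_overlap)
    return {
        "baseline_m": baseline,
        "line_spacing_m": across_m * (1.0 - side_overlap),
        "side_extension_m": 0.15 * across_m,  # at least 15% of image width
        "heading_extension_m": baseline,      # at least one baseline
    }


def image_motion_px(ground_speed_mps, exposure_s, gsd_m):
    """Image motion during one exposure in pixels (assumed meaning of the 1.5 px rule)."""
    return ground_speed_mps * exposure_s / gsd_m


def check_crab_angles(lines_deg):
    """Check crab angles in degrees, given as one list per flight line."""
    problems = []
    flagged_total = image_total = 0
    for line_no, angles in enumerate(lines_deg):
        run = longest = 0
        for i, angle in enumerate(angles):
            if abs(angle) > CRAB_MAX_DEG:
                problems.append(f"line {line_no}, image {i}: {angle:.1f} deg > {CRAB_MAX_DEG} deg")
            near_max = abs(angle) > CRAB_GENERAL_DEG  # assumption: "approaching" = above 15 deg
            run = run + 1 if near_max else 0
            longest = max(longest, run)
            flagged_total += near_max
        image_total += len(angles)
        if longest > MAX_CONSECUTIVE_NEAR_MAX:
            problems.append(f"line {line_no}: {longest} consecutive images near the maximum crab angle")
    if image_total and flagged_total / image_total > MAX_NEAR_MAX_SHARE:
        problems.append(f"{flagged_total} of {image_total} images near the maximum crab angle (> 4%)")
    return problems


if __name__ == "__main__":
    print(plan_geometry(80.0, 120.0, forward_overlap=0.8, side_overlap=0.75))
    motion = image_motion_px(10.0, 1 / 1000, 0.02)
    print(f"image motion {motion:.2f} px, within limit: {motion <= MAX_IMAGE_MOTION_PX}")
```

Treating the consecutive-image rule per flight line and the 4% rule over the whole area mirrors the wording of the two requirements; a project specification may define both more precisely.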